Review: ServerSafe Remote Backup by NetMass

ServerSafe is the enterprise online e-vaulting service from NetMass, Inc. It uses an agentless architecture to back up files over the internet to a secure datacenter. Backup methodologies have been evolving for many years and include practices such as disk-to-disk (D2D) and disk-to-disk-to-tape (D2D2T). It’s the tape part that most IT workers loathe and that costs companies the most. Of course, at a large enough scale it doesn’t make sense to pay a third party to store your data offsite, leaving you to implement an offsite vaulting and retention solution of your own. It’s all about “the cloud” these days, and ServerSafe floats solidly in the stratosphere.

Based on the Televaulting DS-Client platform from Asigra, ServerSafe provides a robust, feature-rich backup solution for Windows or Unix. To get started, you need a dedicated machine to serve as the DS-Client, which connects to the NetMass datacenter and streams all the bits across the internet. This is a 3-tier architecture that comprises a DS-System (storage array), DS-Client (backup server), and DS-User (admin console). The DS-User portion can happily live on the DS-Client system or can be installed on an administrator’s workstation for remote management. Once the DS-Client is installed and authorized to connect to the NetMass datacenter, you can create backup sets, policies, and schedules. Your data is then compressed, encrypted, and sent to the NetMass datacenter to live on their storage arrays; NetMass does not cap disk usage but does charge based on how much disk you consume.
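The order of that pipeline matters: compression has to happen before encryption, because good ciphertext looks like random bytes and doesn’t compress. Here is a quick sketch using Python’s standard `zlib` module, with `os.urandom` standing in for encrypted output. That stand-in is an assumption for illustration only; no real encryption is performed, and this is not how DS-Client is implemented internally.

```python
import os
import zlib


def compressed_fraction(data: bytes) -> float:
    """Return compressed size as a fraction of the original size (zlib, level 9)."""
    return len(zlib.compress(data, 9)) / len(data)


if __name__ == "__main__":
    # Repetitive, document-like plaintext compresses well.
    plaintext = b"Quarterly report: revenue up, costs flat.\n" * 2000
    # Random bytes mimic what ciphertext looks like to a compressor.
    ciphertext_stand_in = os.urandom(len(plaintext))

    print(f"plaintext compresses to {compressed_fraction(plaintext):.1%} of its size")
    print(f"'ciphertext' compresses to {compressed_fraction(ciphertext_stand_in):.1%} of its size")
```

Compressing the random stand-in leaves it at roughly 100% of its original size (slightly more, due to format overhead), which is why a backup client compresses first and encrypts second.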

All data is encrypted before it leaves your premises on its trip across the internet, and it stays encrypted on the NetMass storage array, so it is protected both in flight and at rest. AES is the algorithm, and the key strength depends on the key you supply during install; a 32-character key yields 256-bit encryption, which is considered infeasible to break by brute force with today’s technology. Before a DS-Client can connect to the NetMass datacenter, it has to be expressly permitted, which is a cooperative effort between you and NetMass personnel. Access to the DS-Client is secured by local or Active Directory accounts combined with application-level, role-based permissions.
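The link between a 32-character key and 256-bit encryption is simple arithmetic if each character contributes one byte (8 bits): 32 × 8 = 256. The helper below is a hypothetical illustration of that arithmetic only. The function name is made up, and Asigra’s actual key handling is not described here and may differ (for example, by deriving the key with a hash).

```python
# Key sizes defined by the AES standard, in bits.
AES_KEY_SIZES = (128, 192, 256)


def aes_key_bits(account_key: str) -> int:
    """Hypothetical: infer the AES key size from an account key.

    Assumes each character is one ASCII byte (8 bits), so 16, 24, or 32
    characters map to AES-128, AES-192, or AES-256 respectively.
    """
    key_bytes = account_key.encode("ascii")  # raises UnicodeEncodeError for non-ASCII input
    bits = len(key_bytes) * 8
    if bits not in AES_KEY_SIZES:
        raise ValueError(f"{len(key_bytes)} characters is not a valid AES key length")
    return bits


if __name__ == "__main__":
    print(aes_key_bits("0123456789abcdef0123456789abcdef"))  # 32 characters
```

Running it prints `256` for the 32-character example key.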

The install consists of running an x86 or x64 installer, pointing it to a NetMass-provided customer information file (CRI), installing MSDE with a new SQL instance (or pointing to an existing SQL Server), and generating an account key for AES encryption. Once this key is created, it cannot be changed without destroying the DS-Client registration and starting over from scratch. For the version 8 build of the DS-Client, only Windows Server 2003 and Windows XP are supported as host operating systems. Server 2008 can be backed up by the DS-Client, but it is not yet supported as a host OS; I’m told this is coming in the v9 build.

Setup

Setup is collaborative and done via a shared desktop session with a NetMass engineer. Asigra has published a 500+ page PDF manual for this product, so the options run vast and deep. Once logged into the DS-Client, the first step is to set defaults that control the behavior of the backup sets you create later. The Generations setting dictates the default number of versions of a file you want to store online, and this number is pre-populated in each new backup set. The manual suggests setting the total number of generations allowed to something very high, like 300, and then controlling them more granularly via retention rules. You could set up a base retention rule here as well, but it may make more sense to set these up per server or per file type you’re backing up.

CDP stands for Continuous Data Protection, which means data can be backed up on the fly as it changes. Depending on your generation limits and retention rules, this could be very costly in both online storage and bandwidth.

Defaults for scheduling and email notification are set here as well. There are two options for compression: ZLIB or LZOP. Some quick, unofficial research in the Asigra forums tells me that ZLIB achieves slightly higher compression ratios. The best I’ve seen so far is a 30GB SQL database reduced to 2GB before transmission to NetMass. The remaining tabs are aptly named and cover the features you would expect in an enterprise product. The Advanced tab allows tweaking of a number of low-level parameters, including compression levels, retry values, and various other settings. The Parameters tab controls the Admin Process, which is essentially database maintenance on the DS-Client.
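To get a feel for how the compression level trades CPU time for size, here is a small sketch using Python’s built-in `zlib` module, which produces the same DEFLATE-based format ZLIB refers to. LZOP has no standard-library binding, so it isn’t shown. The sample data is invented; real ratios depend heavily on your files. For reference, the 30GB-to-2GB result above works out to 15:1.

```python
import zlib


def compression_ratio(data: bytes, level: int = 6) -> float:
    """Return original size divided by zlib-compressed size at the given level (1-9)."""
    compressed = zlib.compress(data, level)
    return len(data) / len(compressed)


if __name__ == "__main__":
    # Invented, highly repetitive sample resembling a SQL script dump.
    sample = b"INSERT INTO orders VALUES (1, 'widget', 9.99);\n" * 10_000
    for level in (1, 6, 9):
        print(f"level {level}: {compression_ratio(sample, level):.1f}:1")

    # The figure reported in this review: 30 GB reduced to 2 GB.
    print(f"reported SQL database: {30 / 2:.0f}:1")
```

Higher levels generally squeeze out a little more at the cost of CPU time, which is the same trade-off the Advanced tab’s compression-level setting exposes.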

Backups

Core functionality is completely wizard-driven and includes scheduled backups, on-demand backups, and restores. Backup options include the common file types you are most likely to protect. You can browse the network to select target hosts or enter them by name in UNC format to connect. Once connected, the client shows all available drives and shares, which you can drill down into. The DS-Client has no trouble discovering hidden file shares (those whose names end in $).
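UNC paths take the form `\\host\share\path`, and Windows treats shares whose names end in `$` as hidden. The sketch below builds such paths with Python’s `pathlib.PureWindowsPath` and checks for the hidden-share suffix. The helper names and host/share names are hypothetical and only illustrate the addressing convention, not how DS-Client enumerates shares.

```python
from pathlib import PureWindowsPath


def unc_path(host: str, share: str, *parts: str) -> PureWindowsPath:
    """Build a UNC path such as \\\\host\\share\\dir\\file (hypothetical helper)."""
    return PureWindowsPath(f"\\\\{host}\\{share}", *parts)


def is_hidden_share(path: PureWindowsPath) -> bool:
    """True if the share component of a UNC path ends in '$' (a hidden share)."""
    # For UNC paths, .drive is '\\\\host\\share'; take the last component.
    share = path.drive.rstrip("\\").split("\\")[-1]
    return share.endswith("$")


if __name__ == "__main__":
    for p in (unc_path("fs01", "finance$", "reports"), unc_path("fs01", "public")):
        print(p, "hidden" if is_hidden_share(p) else "visible")
```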

For SQL Servers, you choose the path where the database files live, and the DS-Client displays each database. Choose the databases you want to include in the backup set, then decide whether you want to run DBCC and truncate the logs. If your databases use the simple recovery model, you don’t need to truncate the logs here; if you do, you will see deprecation warnings on your servers. The DS-Client uses standard Microsoft API calls and issues all operations against the database through T-SQL. Remember that this is all agentless, so the backup client connects to SQL Server like a regular consumer with administrative privileges. A dump of the database has to be performed, and it can be written to the same directory as the DB files, to a remote location, or to the DS-Client buffer (on the DS-Client machine). If you have the disk space, dumps in the same place as the DB are easiest. Note that this can affect production performance, because the DS-Client generates additional server load in the form of I/O and network traffic.
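For readers curious what an agentless dump looks like on the SQL Server side, the sketch below builds the kind of T-SQL a client could send over an ordinary connection: an optional `DBCC CHECKDB` followed by `BACKUP DATABASE ... TO DISK`. This illustrates the equivalent standard commands; it is not the statements DS-Client actually issues. The helper names are hypothetical, and log truncation is deliberately left out. The dump directory can be the data directory, a remote UNC path, or a local buffer folder, matching the three dump locations described above.

```python
from pathlib import PureWindowsPath
from typing import List


def quote_ident(name: str) -> str:
    """Bracket-quote a SQL Server identifier, escaping any closing brackets."""
    return "[" + name.replace("]", "]]") + "]"


def quote_literal(text: str) -> str:
    """Return an N'...' Unicode string literal with single quotes doubled."""
    return "N'" + text.replace("'", "''") + "'"


def build_dump_statements(database: str, dump_dir: str, run_dbcc: bool = True) -> List[str]:
    """Hypothetical helper: T-SQL to check a database and dump it to a .bak file.

    dump_dir may be the data directory, a UNC share, or a local buffer folder.
    """
    statements = []
    if run_dbcc:
        statements.append(f"DBCC CHECKDB ({quote_ident(database)}) WITH NO_INFOMSGS;")
    target = str(PureWindowsPath(dump_dir, f"{database}.bak"))
    statements.append(
        f"BACKUP DATABASE {quote_ident(database)} TO DISK = {quote_literal(target)} WITH INIT;"
    )
    return statements


if __name__ == "__main__":
    # Hypothetical database and remote dump share.
    for stmt in build_dump_statements("Sales", r"\\sqlbox01\dumps"):
        print(stmt)
```

Whichever location you pick, the dump itself is what generates the extra I/O on the production server, so scheduling it outside business hours is usually wise.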

Next, you can choose an existing retention rule, create a new one, or use none at all. While retention rules can be created separately, this is an easy way to pair retention with backup sets as you create them. At this time, the DS-Client, via NetMass, offers three retention options:

- Deletion of files removed from source: how long to keep files online whose source file has been deleted

- Time-based online retention: how many generations to keep online and for how long

- Enable local storage retention: keeping generations locally in addition to online

Deletion of files removed from source defaults to 30 days with no kept generations. That may work fine, or you could keep the last changed generation of a file online even after it’s been deleted from the source, just in case. Keep in mind, however, that those kept generations stay online until an applicable retention rule deletes them from the DS-System.
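One way to picture this rule is as a small function: once the source file has been gone for longer than the grace period, purge everything except the newest N generations. The sketch below is a hypothetical model of the behavior described above (30 days, 0 generations by default), not Asigra’s implementation; the function and parameter names are made up.

```python
from datetime import date, timedelta
from typing import List, Optional


def purge_after_source_deletion(
    generations: List[date],
    deleted_on: Optional[date],
    today: date,
    keep_days: int = 30,
    keep_generations: int = 0,
) -> List[date]:
    """Hypothetical model: return the generations to purge from online storage."""
    if deleted_on is None or today - deleted_on < timedelta(days=keep_days):
        return []  # source still exists, or the grace period has not elapsed
    newest_first = sorted(generations, reverse=True)
    # Keep the newest `keep_generations`; everything older is purged.
    return sorted(newest_first[keep_generations:])


if __name__ == "__main__":
    gens = [date(2010, 1, 1), date(2010, 1, 5), date(2010, 1, 9)]
    deleted = date(2010, 1, 10)
    print(purge_after_source_deletion(gens, deleted, date(2010, 2, 15)))
    print(purge_after_source_deletion(gens, deleted, date(2010, 2, 15), keep_generations=1))
```

With the defaults, all three generations are purged after the 30 days are up; with `keep_generations=1`, the January 9 copy survives until some other retention rule removes it.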

Time-based retention dictates how long to store generations online, both near and long term. This is useful for compliance, especially if you work in an industry with specific data retention requirements. You can get extremely granular with these rules, which can be mind-bending for your non-technical senior management. For instance, a good general policy might be to protect data for up to 7 years, which ensures that the most recent copy of every file, whether it was deleted or not, is stored online for up to 7 years. As changes to a file slow down over time, so does the number of generations kept. A document that eventually stops getting updated, for example, ends up with a single generation online, its last update, kept for 7 years. The tiers of such a policy look like this:

- 1 generation every day for a week

- 1 generation every week for a month

- 1 generation every month for a year

- 1 generation every year for 7 years
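The tiered schedule above is a classic generation-thinning (grandfather-father-son style) policy. Here is a minimal sketch of how such a policy could decide which generations to keep, assuming a month is 30 days, a year is 365 days, and buckets are measured from the current time rather than from calendar boundaries. Those are simplifying assumptions, and DS-Client’s actual algorithm may differ.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple

# Each tier: (bucket width, how far back the tier reaches).
# Simplifying assumption: a month is 30 days and a year is 365 days.
TIERS: List[Tuple[timedelta, timedelta]] = [
    (timedelta(days=1), timedelta(days=7)),          # 1 per day for a week
    (timedelta(days=7), timedelta(days=30)),         # 1 per week for a month
    (timedelta(days=30), timedelta(days=365)),       # 1 per month for a year
    (timedelta(days=365), timedelta(days=7 * 365)),  # 1 per year for 7 years
]


def generations_to_keep(
    timestamps: Iterable[datetime],
    now: datetime,
    tiers: List[Tuple[timedelta, timedelta]] = TIERS,
) -> List[datetime]:
    """Return the generation timestamps a tiered policy would keep online."""
    stamps = list(timestamps)
    keep = set()
    for bucket, horizon in tiers:
        newest_in_bucket = {}
        for ts in stamps:
            age = now - ts
            if age < timedelta(0) or age >= horizon:
                continue  # outside this tier's window
            index = age // bucket  # which day/week/month/year bucket it falls in
            if index not in newest_in_bucket or ts > newest_in_bucket[index]:
                newest_in_bucket[index] = ts
        keep.update(newest_in_bucket.values())  # newest generation per bucket
    return sorted(keep)


if __name__ == "__main__":
    now = datetime(2010, 1, 31)
    daily = [now - timedelta(days=d) for d in range(10)]
    print(len(generations_to_keep(daily, now)))
```

With ten daily generations, the sketch keeps eight: the seven from the last week plus the newest one from the previous week. A single, never-updated generation stays until it is 7 years old and then drops out, matching the document example above. Rolling buckets keep the code short; a production system would more likely align buckets to calendar days, weeks, and months.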

Local retention policy is tied to online policy, so unfortunately you can’t set local retention longer than online retention. The option is still useful for quick restores, since data can be retrieved from local media without being pulled down from NetMass across the wire. The same compression and encryption apply to backup sets stored on locally attached media. Server storage or a high-capacity USB drive would work fine for this.

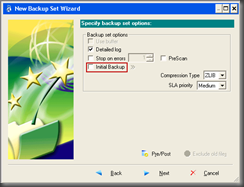

The next step sets options for the backup set, including taking the initial backup to local media if uploading it would otherwise take days. Once your initial backup is complete, NetMass will either pick up the drive from you or you can ship it to them, and the data is loaded directly into the array. After the initial backup, the mode switches to differential, so much less data travels over the internet.
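Whether seeding by drive is worth it comes down to simple arithmetic: data size divided by upload bandwidth. Here is a rough estimator; the link speed and efficiency factor are hypothetical inputs, and compression would shrink the amount actually sent.

```python
def transfer_hours(size_gb: float, uplink_mbps: float, efficiency: float = 1.0) -> float:
    """Estimate hours to upload size_gb (decimal GB) over an uplink_mbps link.

    efficiency < 1.0 accounts for protocol overhead and competing traffic.
    """
    if uplink_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("uplink must be positive and efficiency in (0, 1]")
    bits = size_gb * 1e9 * 8
    seconds = bits / (uplink_mbps * 1e6 * efficiency)
    return seconds / 3600


if __name__ == "__main__":
    # Roughly the 100GB data set mentioned in this review, over a hypothetical 10 Mbit/s uplink.
    print(f"{transfer_hours(100, 10):.1f} hours")       # full line rate
    print(f"{transfer_hours(100, 10, 0.8):.1f} hours")  # 80% effective throughput
```

That works out to about 22.2 hours at full line rate and 27.8 hours at 80% efficiency, which shows why a local seed becomes attractive as data sets grow.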

Notification options are plentiful, but daily status messages can easily overwhelm your inbox. Scheduling options are equally plentiful, and you can create as many schedules as you need.

As a final step, you can make the new backup set active by designating it as a regular set type, or you can make it statistical. A statistical set performs a test run of the backup and estimates how much online storage the job will consume.

Restores

The restore process is very clean and can be executed on any backup set that has data online. Once you launch the wizard you can see the directories and files associated with a particular backup set. This is also one way to look at what and how much you have stored online. Clicking “file info” on any selected file shows you how many generations are stored online and the size of each. You can also look at only the files that were modified in any folder. The last step is deciding whether to restore files to their original location or an alternate.

The other way to see what you’re storing online is to open the Online File Summary under Reports. It tells you almost everything you need to know about your online data and can chart certain filters as well. This is one area that I think could be improved: too much digging is required to find out exactly what you have online and how many generations of each file are stored. If Asigra tied this report more closely to the backup set information, in the same context, I think it would be much more user-friendly overall.

Backup sets can be suspended or deleted outright, but you can get much more granular with selective backups, removing online generations of individual files.

Overall, I am very impressed with the ServerSafe offering from NetMass and think it is a great next-generation offsite backup solution. I’m currently running my trial DS-Client on an old P4 PC running XP Pro, and the jobs are completing without problems. I have roughly 100GB of data protected now. While a large company may not find much value in paying NetMass to store its backup data, it could implement the Asigra DS solution and build an e-vaulting architecture of its own, in-house. We all hate tapes, and this is a solid alternative. The ServerSafe trial is a snap and can seamlessly transition into production as soon as you say you’re ready. NetMass is all about low pressure and lets you become acclimated at your own pace. I look forward to seeing what’s new in the next major release of the DS-Client.