Hyper-V Design for NUMA Architecture and Alignment

I’m publishing this design topic separately for both Hyper-V and vSphere, assuming that the audience will differ between the two. Some of this content will be repeated between posts but arranged to focus on the hypervisor each prospective reader will likely care most about.

Be sure to also check out: vSphere Design for NUMA Architecture and Alignment (coming soon)

Non-Uniform Memory Access (NUMA) has been with us for a while now and was created to overcome the scalability limits of the Symmetric Multi-Processing (SMP) CPU architecture. In SMP, all memory access was tied to a single shared physical bus. This design has obvious limitations, especially with higher CPU counts and increased traffic on the bus.

NUMA limits the number of CPUs tied to a single memory bus, and each such grouping is what we call a NUMA node. A high-performing NUMA node consists of a physical CPU socket and the memory directly attached to it, accessed locally by the applications and services running there.

NUMA spanning occurs when memory is accessed remote to the NUMA node an application, service or VM is connected to. NUMA spanning means your application or service won’t run out of memory or CPU cores within its NUMA node as it can access the remote DIMMs or cores of an adjacent CPU. The problem with NUMA spanning is that your application or service has to cross the lower performing interconnects to store its pages in the remote DIMMs. This dialog in Hyper-V Settings explains the situation fairly well:

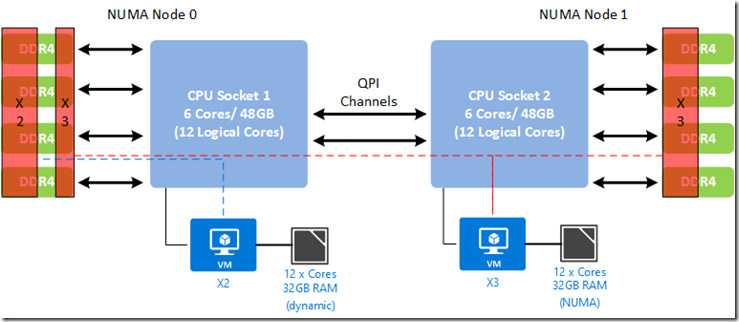

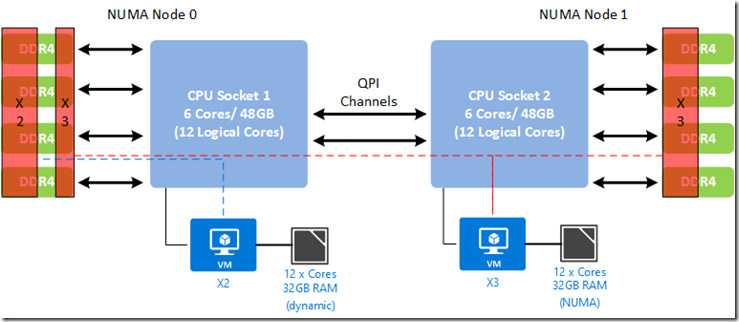

What is NUMA alignment?

In the case of virtualization, VMs created on a physical server receive their virtual CPU (vCPU) allotments from the physical CPU cores of their hosts (obviously). There are many tricks used by hypervisors to oversubscribe CPU and run as many VMs on a host as possible while ensuring good performance. Better utilization of physical resources = better return on investment and use of the company dollar. To design your virtual environment to be NUMA aligned means ensuring that your VMs receive vCPUs and RAM tied to a single physical CPU (pCPU), thus ensuring the memory and pCPU cores they access are directly connected and not accessed via traversal of a CPU interconnect (QPI). As fast as interconnects are these days, they are still not as fast as the connection between a DIMM and pCPU.

Running many smaller 1 or 2 vCPU VMs with lower amounts of RAM, such as virtual desktops, isn’t as big a concern and we generally see very good oversubscription at high performance levels. In the testing my team does at Dell for VDI, we see somewhere between 9-12 vCPUs per pCPU core depending on the workload and VM profile. For larger server-based VMs, such as those used for RDSH sessions, this ratio is smaller and more impactful because there are fewer overall VMs consuming a greater amount of resources. In short, NUMA alignment is much more important when you have VMs with larger vCPU and RAM allocations.

To achieve sustained high performance for a dense server workload, it is important to consider:

- The number of vCPUs assigned to each VM within a NUMA node to avoid contention

- The amount of RAM assigned to each VM to ensure that all memory access remains within a physical NUMA node.
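Both considerations start with knowing the physical NUMA layout of the host. A minimal sketch, assuming the Hyper-V PowerShell module (Server 2012 or later) and an elevated session on the host itself; check the output on your own build, since the fields shown can vary by version:

```powershell
# List each physical NUMA node on this host along with its processor
# and memory availability (default output, no property filtering).
Get-VMHostNumaNode

# Show the logical processor count and whether the host allows VMs
# to span NUMA nodes (the "NUMA spanning" setting in Hyper-V Settings).
Get-VMHost | Select-Object Name, LogicalProcessorCount, NumaSpanningEnabled
```

Dividing the logical processor count and installed RAM by the number of nodes gives a rough per-node ceiling to size VMs against.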

NUMA Alignment in Hyper-V

Let’s walk through a couple of examples in the lab to illustrate this. My Windows cluster consists of:

- 2 x Dell PowerEdge R610s with dual X5670 6-core CPUs and 96GB RAM running Server 2012 R2 Datacenter in a Hyper-V Failover Cluster. 24 total logical processors seen by Windows (hyperthreaded).

- 3 x 2012 R2 VMs named X1, X2, X3 each configured with 12 x vCPUs, 32GB RAM

Hyper-V automatically assigns VMs to NUMA nodes and balances the load between them. Changing the X3 VM to an allotment of 13 vCPUs, 1 vCPU beyond the configured NUMA boundary, has immediate effects. Now this VM is spanning 2 NUMA nodes/pCPU sockets for its vCPU entitlement. CPU contention could occur at this point with other VMs as load increases, but memory pages will not become remote unless the memory available to the local NUMA node is exceeded. The same thing happens if you assign a memory allocation larger than a single NUMA node.
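The vCPU boundary described here can also be checked from PowerShell rather than the VM settings dialog. This is a rough sketch, assuming the Hyper-V module and using the lab VM name X3 as a stand-in; the comparison logic is my own illustration, not something Hyper-V reports directly:

```powershell
# VM name taken from the lab above; substitute your own.
$vmName = 'X3'

# Read the VM's vCPU count and the per-node maximum from its NUMA settings.
$cpu = Get-VMProcessor -VMName $vmName
$cpu | Select-Object VMName, Count, MaximumCountPerNumaNode, MaximumCountPerNumaSocket

# Flag a vCPU assignment that exceeds the configured NUMA node boundary.
if ($cpu.Count -gt $cpu.MaximumCountPerNumaNode) {
    "$vmName has $($cpu.Count) vCPUs, more than the $($cpu.MaximumCountPerNumaNode) allowed per NUMA node, so it will span nodes."
} else {
    "$vmName fits within a single NUMA node for vCPU."
}
```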

To illustrate what is happening here, consider the following drawing. The X3 VM having 13 vCPUs is exceeding the configured maximum of processors for its NUMA topology and must now span 2 physical sockets. Notice that the assigned virtual memory in this configuration is still local to each VM’s primary NUMA node.

For a VM to actually be able to store memory pages in a remote NUMA node, the key change that must be made is called out on the main processor tab for the VM spanning NUMA: Dynamic memory must be disabled to allow vNUMA. If dynamic memory is enabled on a VM, no NUMA topology is exposed to the VM, regardless of what settings you change! Note here also that there is no way to set CPU affinity for a VM in Hyper-V and generally you should never need to. If you want to guarantee a certain percentage of CPU for a VM, set its “Virtual Machine Reserve %” accordingly.
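Both settings mentioned above are reachable from PowerShell. A minimal sketch, assuming the Hyper-V module; the VM name and the 25 percent figure are placeholder values chosen for illustration:

```powershell
# Placeholder VM name from the lab above.
$vmName = 'X3'

# Check whether Dynamic Memory is enabled; per the text above, this
# determines whether a virtual NUMA topology is exposed to the guest.
Get-VMMemory -VMName $vmName | Select-Object VMName, DynamicMemoryEnabled

# Reserve a guaranteed share of CPU for the VM (example value only).
# This corresponds to "Virtual Machine Reserve %" in the GUI.
Set-VMProcessor -VMName $vmName -Reserve 25

# Confirm the new reserve.
Get-VMProcessor -VMName $vmName | Select-Object VMName, Count, Reserve
```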

NOTE - Remember that if you use Dynamic Memory, you need to carefully consider the values for: Startup, min, max and buffer. What you configure for Startup here is what your VM will boot with and consume as a minimum! If you set your startup to 32GB, your VM will consume 32GB with no workload, reducing what is available to other VMs. Configure your max RAM value as the top end amount you want your VM to expand to.
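For reference, the four Dynamic Memory values can be set in one call. This is only a sketch with made-up sizes (apart from the 32GB maximum used in the lab); it assumes the Hyper-V module and that the VM is powered off, since Dynamic Memory cannot be toggled on a running VM:

```powershell
# Example values only: tune Startup, Minimum, Maximum and Buffer
# to the workload. Startup is what the VM boots with.
Set-VMMemory -VMName 'X2' `
    -DynamicMemoryEnabled $true `
    -StartupBytes 4GB `
    -MinimumBytes 2GB `
    -MaximumBytes 32GB `
    -Buffer 20

# Review the resulting configuration.
Get-VMMemory -VMName 'X2' |
    Select-Object VMName, DynamicMemoryEnabled, Startup, Minimum, Maximum, Buffer
```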

To illustrate the effects of an imbalanced configuration, consider the following PowerShell output. My X2 VM has been assigned 32GB RAM with Dynamic Memory enabled. My X3 VM has also been assigned 32GB RAM but with Dynamic Memory disabled thus forcing NUMA spanning. The “remote physical pages” counters indicate NUMA spanning in action with the X3 VM currently storing 6% of its memory pages in the remote NUMA node. This is with absolutely no workload running mind you. Lesson: In most cases, you want to leave Dynamic Memory enabled to achieve the best performance and balance of resources! Commands used below:

Get-VM

Get-VMProcessor

Get-Counter "\Hyper-V VM Vid Partition(*)\*"
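The wildcard counter query returns a lot of output. To narrow it to the NUMA spanning picture, something along these lines should work; it is a sketch that assumes the "Physical Pages Allocated" and "Remote Physical Pages" counters are present on the host, and the percentage calculation is my own addition:

```powershell
# Pull only the two counters needed to judge NUMA spanning per VM.
$samples = (Get-Counter -Counter @(
    '\Hyper-V VM Vid Partition(*)\Physical Pages Allocated',
    '\Hyper-V VM Vid Partition(*)\Remote Physical Pages'
)).CounterSamples

# Group the samples by VM (counter instance) and compute the remote share.
$samples | Group-Object InstanceName | ForEach-Object {
    $allocated = ($_.Group | Where-Object Path -like '*physical pages allocated').CookedValue
    $remote    = ($_.Group | Where-Object Path -like '*remote physical pages').CookedValue
    [pscustomobject]@{
        VM             = $_.Name
        PagesAllocated = $allocated
        RemotePages    = $remote
        # Guard against divide-by-zero for instances with no pages allocated.
        RemotePercent  = if ($allocated) { [math]::Round(100 * $remote / $allocated, 1) } else { 0 }
    }
}
```

A RemotePercent of 0 for every VM is the aligned state to aim for.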

Here is a visual of what’s happening with X3 spanning NUMA. A percentage of its memory pages are being stored in the remote NUMA node 0 which requires crossing the QPI links to access, thus reducing performance:

Look what happens when I re-enable Dynamic Memory on X3. You want to always see the values for “remote physical pages” as 0:

The risk of this happening is greater with VMs with heavy resource requirements. There may be some instances where the performance tradeoff is worth it, simply because you have a VM that absolutely requires more resources. Look at what happens when I bring my X1 VM online configured with 64GB static RAM on the same server:

It is immediately punted to the second server in my cluster that has enough resources to power it on. If the cluster had too few resources available on any node, the VM would fail to power on.

It’s worth noting that if you have your VMs set to save state when the Hyper-V host shuts down, be prepared to consume a potentially enormous amount of disk space. When this is enabled, Hyper-V will write a BIN file equal to the amount of RAM in use by the VM. If you disabled Dynamic Memory, then the BIN file will be the full amount of assigned RAM.
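To see which VMs are set to save state, and roughly how large their BIN files could be, a quick check along these lines can help. It is a sketch assuming the Hyper-V module; the commented Set-VM line shows one possible alternative and may require the VM to be off depending on your version:

```powershell
# List each VM's automatic stop action next to its currently assigned memory (in GB).
Get-VM | Select-Object Name, AutomaticStopAction,
    @{ Name = 'MemoryAssignedGB'; Expression = { [math]::Round($_.MemoryAssigned / 1GB, 1) } }

# Optionally switch a VM from Save to a guest shutdown to avoid the BIN file.
# Set-VM -Name 'X3' -AutomaticStopAction ShutDown
```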

32GB on disk for my X3 VM with Dynamic Memory disabled:

Key Takeaways

- Designing your virtual infrastructure to work in concert with the physical NUMA boundaries of your servers will net optimal performance and minimize contention, especially for VMs with large resource assignments.

- A single VM consuming more vCPUs than a single NUMA node contains will be scheduled across multiple physical NUMA nodes, causing possible CPU contention as loads increase.

- One or more VMs consuming more RAM than a single NUMA node contains will span NUMA and store a percentage of their memory pages in the remote NUMA node, causing reduced performance.

- In Hyper-V, in most cases, you want to leave Dynamic Memory enabled so the server can adjust using a balloon driver as required.

- CPU affinity does not exist in Hyper-V. If you want to assign a VM a guaranteed slice of CPU, set its “virtual machine reserve %” in its CPU settings.

- Virtual memory assignments are cumulative and are more impactful when not using dynamic memory.

- Virtual CPU assignments are non-cumulative and will not force a VM to span NUMA as long as a vCPU assignment falls within the limits of a defined NUMA node. A vCPU assignment that exceeds the boundaries of a configured NUMA node will cause spanning.

- Be careful what you set your “automatic stop action” to in Hyper-V manager or SCVMM. If set to save state, large BIN files will be written to disk equaling the memory in use by each VM.

Resources:

http://lse.sourceforge.net/numa/faq/

Hyper-V Virtual NUMA Overview

Hyper-V 2012 Checklist
